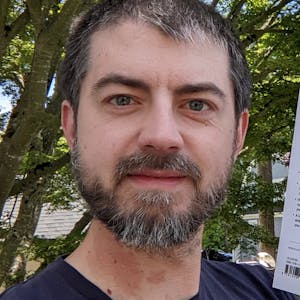

Thanks for a great talk. I'm Phil Hawksworth, head of developer relations at Deno. I'll be available for Q&A later, and you can connect with me on social media at philhawksworth.

Deno's creator took Node, split it, sorted it, and joined it, and Deno was born. But naming things is hard.

I've been building for the web for 25 years. Across different places, I've observed that web developers tend to follow trends over the years. JavaScript has become ubiquitous, powering the web from the front end to the server and beyond. Node.js played a significant role in bringing JavaScript into the mainstream and increasing its resilience. The natural evolution of JavaScript is exciting, with new tools emerging.

Personally, I'm cautious about adopting new tools, but I stumbled upon Deno while building edge functions on Netlify. Those edge functions run on Deno under the hood, but the code looks like ordinary JavaScript, so I was writing functions in JavaScript and TypeScript without realizing that Deno was powering them. Later I got excited about a site generator called Lume, which led me to Deno itself. Installing Deno and running its commands got me started.

Deno is an open source JavaScript, TypeScript, and WebAssembly runtime with secure defaults. It's built on V8, Rust, and Tokio, and it offers a JavaScript runtime, a TypeScript toolchain, a package manager, and a task runner. It is a single tool that covers testing, linting, formatting, benchmarking, and compiling. Its API is based on web standards, which makes it familiar to web developers. Enabling Deno in your IDE provides insights into your code and hinting across the Deno ecosystem.

Deno's package management lets you import packages from npm and the wider Node ecosystem to ease the transition, and it simplifies managing dependencies and updating packages. `deno clean` purges the cache, which is then repopulated gradually as dependencies are needed again. Tasks are defined in `deno.json` and can be run with the `deno run` or `deno task` commands.
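To make the point about web-standard APIs concrete, here is a minimal sketch that was not shown in the talk. The names `greetingFor` and `handler` are hypothetical, chosen only for illustration. The code relies solely on the standard `URL`, `Request`, and `Response` objects, which is why it should look familiar to anyone who has written browser code.

```typescript
// Hypothetical helper: builds a greeting from a URL's query string using the
// web-standard URL and URLSearchParams APIs.
function greetingFor(rawUrl: string): string {
  const url = new URL(rawUrl);
  // Fall back to "world" when no ?name= parameter is present.
  const name = url.searchParams.get("name") ?? "world";
  return `Hello, ${name}!`;
}

// Hypothetical request handler: takes a web-standard Request and returns a
// web-standard Response, with no runtime-specific types involved.
function handler(req: Request): Response {
  return new Response(greetingFor(req.url), {
    headers: { "content-type": "text/plain; charset=utf-8" },
  });
}
```

In a Deno project, a handler of this shape could be passed to `Deno.serve(handler)`. Because of the secure defaults mentioned above, running such a server would need network access to be granted, for example with the `--allow-net` flag.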
Deno's built-in test framework runs tests with the `deno test` command. Deno also includes a built-in TypeScript and JavaScript toolchain, so no additional configuration is needed and TypeScript files run without a separate compilation step. It offers tools for type checking, generating type definitions, formatting code, and linting. By unifying these tools, Deno makes it easier for teams to adopt a consistent toolset. Deno's security model and performance are robust. To learn more about Deno, explore the examples, tutorials, the Deno Discord, Bluesky updates, and a deep dive video. Thank you for your time!